Framepack AI vs. Narrow AI

Framepack AI

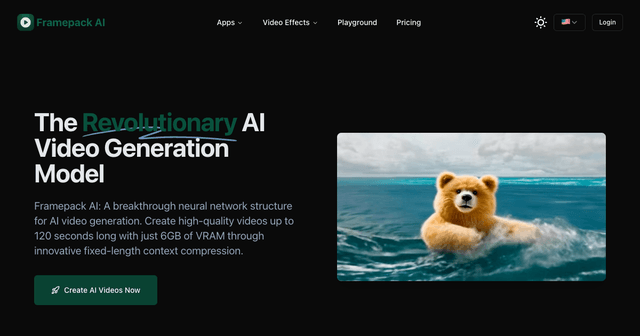

# Framepack AI: The Revolutionary AI Video Generation Model

Framepack AI is a breakthrough neural network structure for AI video generation. It employs innovative "next frame prediction" technology combined with a unique fixed-length context compression mechanism, enabling users to generate high-quality, high-framerate (30fps) videos up to 120 seconds long with very low hardware barriers (requiring only consumer-grade NVIDIA GPUs with 6GB of VRAM).

## What Makes Framepack AI Unique?

The core innovation of Framepack AI lies in its **fixed-length context compression** technology. In traditional video generation models, context length grows linearly with video duration, leading to a sharp increase in VRAM and computational resource demand. Framepack AI effectively solves this challenge by intelligently evaluating the importance of input frames and compressing this information into fixed-length context 'notes'. This significantly reduces the demand for VRAM and computational resou...
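The difference between a context that grows with video length and one that stays bounded can be sketched with simple token counting. This is only an illustration, not Framepack AI's actual code: the per-frame token count (1536), the use of frame age as a stand-in for "importance", and the halving ratio are all assumptions made for the example.

```python
def linear_context_tokens(num_frames: int, tokens_per_frame: int) -> int:
    """Context size when every past frame is kept at full size."""
    return num_frames * tokens_per_frame


def packed_context_tokens(num_frames: int, tokens_per_frame: int, ratio: int = 2) -> int:
    """Context size when older frames get geometrically smaller budgets.

    Assumption for illustration: the frame that is `age` steps in the past
    receives tokens_per_frame // ratio**age tokens. Frames whose budget
    rounds down to zero are dropped, so the total stays below
    tokens_per_frame * ratio / (ratio - 1) however long the video is.
    """
    total = 0
    for age in range(num_frames):
        budget = tokens_per_frame // (ratio ** age)
        if budget == 0:
            break  # older frames contribute nothing further
        total += budget
    return total


TOKENS_PER_FRAME = 1536  # hypothetical value, not taken from Framepack AI

for frames in (30, 300, 3600):
    print(
        frames,
        linear_context_tokens(frames, TOKENS_PER_FRAME),
        packed_context_tokens(frames, TOKENS_PER_FRAME),
    )
```

Under these assumptions the linear count keeps growing with the number of frames, while the packed count stops growing once the oldest frames' budgets reach zero.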

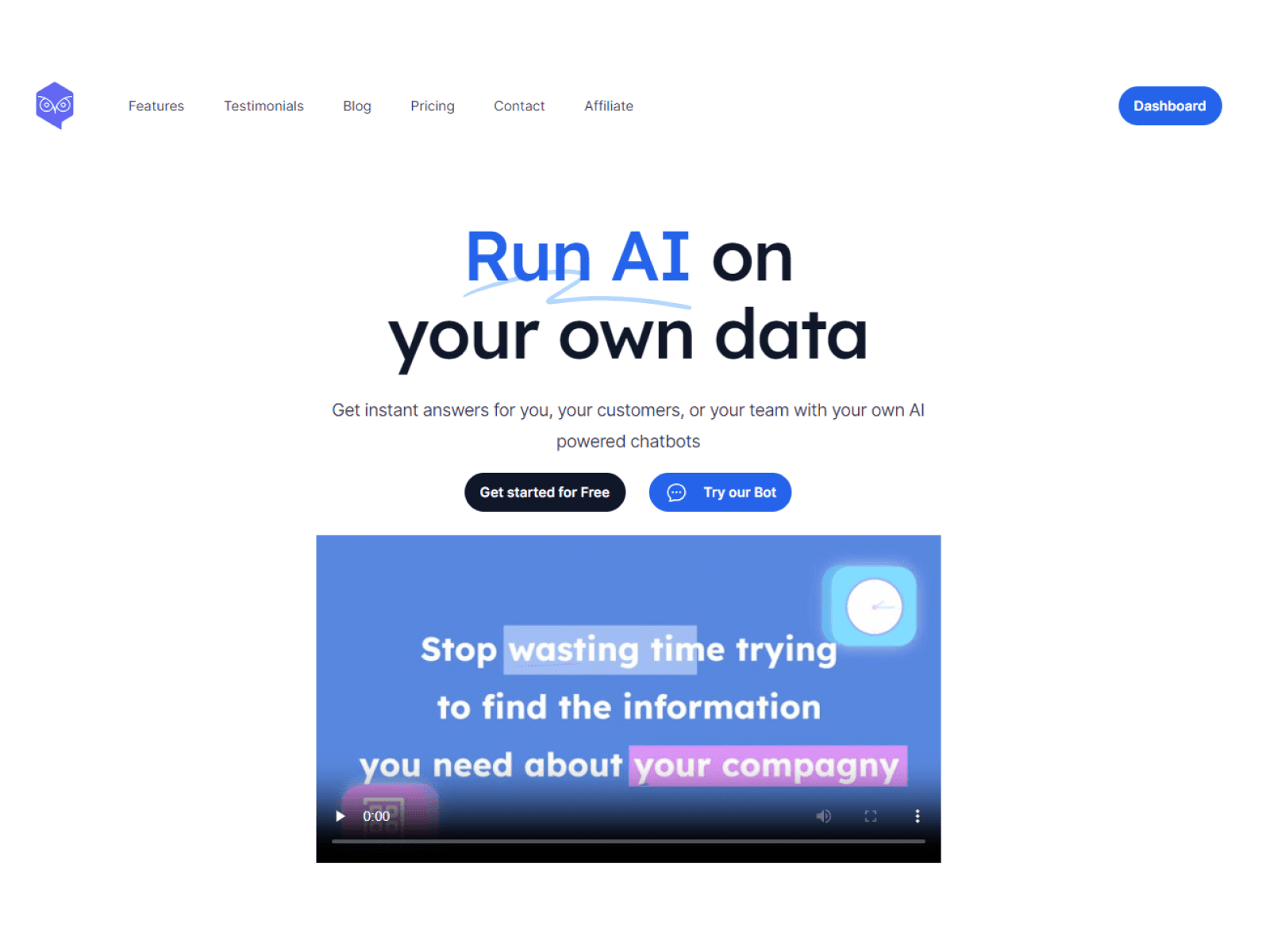

Narrow AI

Introducing Narrow AI: Take the Engineer out of Prompt Engineering

Narrow AI autonomously writes, monitors, and optimizes prompts for any model, so you can ship AI features 10x faster at a fraction of the cost.

Maximize quality while minimizing costs

- Reduce AI spend by 95% with cheaper models
- Improve accuracy through Automated Prompt Optimization
- Achieve faster responses with lower latency models

Test new models in minutes, not weeks

- Easily compare prompt performance across LLMs
- Get cost and latency benchmarks for each model
- Deploy on the optimal model for your use case

Ship LLM features 10x faster

- Automatically generate expert-level prompts
- Adapt prompts to new models as they are released
- Optimize prompts for quality, cost and speed

Learn more at getnarrow.ai

Reviews



Narrow AI pros

| Item | Votes |
|---|---|
| Automated Model Migration | 1 |
| Intelligent Cost & Performance Optimization | 1 |
| Continuous Performance Monitoring | 1 |


Frequently Asked Questions

Framepack AI is designed specifically for AI video generation and uses fixed-length context compression to create high-quality videos with minimal hardware requirements. Narrow AI, in contrast, optimizes prompts for AI models and does not generate video. If your primary goal is video creation, Framepack AI is the better choice.

Narrow AI is designed to cut AI spending by optimizing prompts and using cheaper models, which lowers the cost of deploying AI features. Framepack AI focuses on video generation, not on cost optimization for AI models. If cost efficiency in AI operations is your priority, Narrow AI may be more beneficial, but the two tools do not directly compete.

Narrow AI is specifically designed to enable rapid deployment of AI features by automating prompt generation and optimization, allowing for faster shipping of AI functionalities. Framepack AI, while innovative in video generation, does not focus on the deployment of AI features in the same way. Therefore, for rapid deployment, Narrow AI is the more suitable option.

Framepack AI is open-source and designed to be accessible for users with consumer-grade hardware, making it user-friendly for those interested in video generation. Narrow AI, while also user-friendly, targets a different audience focused on prompt engineering and optimization. The user-friendliness of each tool depends on the specific needs and expertise of the user.

Framepack AI is a revolutionary AI video generation model that utilizes a unique 'next frame prediction' technology along with fixed-length context compression. This allows users to create high-quality videos at 30 frames per second (fps) for up to 120 seconds, all while requiring only consumer-grade NVIDIA GPUs with 6GB of VRAM.

Key features of Framepack AI include fixed-length context compression, which reduces VRAM requirements; modest hardware requirements (NVIDIA RTX 30XX, 40XX, or 50XX series GPUs); frame generation at approximately 2.5 seconds per frame; strong anti-drift capabilities for consistent video quality; and support for multiple attention mechanisms. It is also open-source and free.

Framepack AI requires an NVIDIA RTX 30XX, 40XX, or 50XX series GPU with at least 6GB of VRAM. It is compatible with both Windows and Linux operating systems and supports FP16 and BF16 data formats.
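The stated requirements can be turned into a small pre-flight check. The sketch below is an assumption-laden illustration, not part of Framepack AI: the function name `meets_requirements` and the name-parsing rule (two-digit series prefix after "RTX") are hypothetical and only encode the series and VRAM figures quoted above.

```python
import re

SUPPORTED_SERIES = ("30", "40", "50")  # RTX 30XX, 40XX, 50XX as stated above
MIN_VRAM_GB = 6


def meets_requirements(gpu_name: str, vram_gb: float) -> bool:
    """Return True if the GPU name looks like a supported RTX series
    and the card has at least the stated amount of VRAM."""
    # Hypothetical parsing rule: "RTX" followed by a four-digit model number,
    # whose first two digits identify the series (e.g. "RTX 4090" -> "40").
    match = re.search(r"RTX\s*(\d{2})\d{2}", gpu_name, flags=re.IGNORECASE)
    if match is None:
        return False
    return match.group(1) in SUPPORTED_SERIES and vram_gb >= MIN_VRAM_GB


print(meets_requirements("NVIDIA GeForce RTX 4090", 24))  # True
print(meets_requirements("NVIDIA GeForce RTX 2080", 8))   # False: unsupported series
print(meets_requirements("NVIDIA GeForce RTX 3050", 4))   # False: under 6GB
```

On a machine with PyTorch installed, the two inputs could be read from `torch.cuda.get_device_properties(0)` (its `name` and `total_memory` fields); that step is left out here to keep the sketch dependency-free.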

Framepack AI generates frames at approximately 2.5 seconds per frame on RTX 4090 desktop GPUs. With optimizations such as teacache, this can be reduced to about 1.5 seconds per frame.
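To put these per-frame figures in perspective, the arithmetic for a full-length clip can be worked out directly. The sketch assumes the per-frame time stays constant over the whole video and ignores model loading and encoding overhead; the function name is made up for the example.

```python
def generation_time_seconds(duration_s: float, fps: int, seconds_per_frame: float) -> float:
    """Estimated wall-clock time, assuming a constant cost per generated frame."""
    frames = round(duration_s * fps)
    return frames * seconds_per_frame


# A 120-second video at 30fps is 3600 frames.
baseline = generation_time_seconds(120, 30, 2.5)
with_teacache = generation_time_seconds(120, 30, 1.5)

print(f"baseline: {baseline / 3600:.1f} h, with teacache: {with_teacache / 3600:.1f} h")
# prints: baseline: 2.5 h, with teacache: 1.5 h
```

Under this assumption, the quoted optimization saves roughly an hour on a maximum-length video on an RTX 4090.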

Framepack AI was developed by Lvmin Zhang, the creator of ControlNet, and Maneesh Agrawala, a professor at Stanford University. It is a fully open-source project with its code and models available on GitHub.

You can download Framepack AI from its official GitHub repository. It can be used as a standalone application or integrated with platforms like ComfyUI. Additionally, the community has created a Framepack plugin for easy usage.

The pros of Narrow AI include Automated Model Migration, Intelligent Cost & Performance Optimization, and Continuous Performance Monitoring. There are currently no user-generated cons listed for Narrow AI.

Narrow AI is a platform that autonomously writes, monitors, and optimizes prompts for any model, allowing users to ship AI features 10 times faster and at a fraction of the cost. It aims to maximize quality while minimizing costs, reduce AI spend by 95% with cheaper models, improve accuracy through Automated Prompt Optimization, and achieve faster responses with lower latency models.

Narrow AI offers several features including Automated Model Migration, Intelligent Cost & Performance Optimization, Continuous Performance Monitoring, and Automated Prompt Optimization. It also allows users to easily compare prompt performance across different LLMs, get cost and latency benchmarks for each model, and deploy on the optimal model for their use case.
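The idea of benchmarking several models and then deploying on the optimal one can be sketched as a simple selection rule. This is not Narrow AI's API or method: the `Benchmark` type, the `pick_model` function, the model names, and every number below are placeholders invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Benchmark:
    model: str                # placeholder model name
    accuracy: float           # fraction of test cases passed
    cost_per_1k_calls: float  # in arbitrary currency units
    latency_ms: float         # typical response time


def pick_model(
    benchmarks: Sequence[Benchmark], min_accuracy: float, max_latency_ms: float
) -> Optional[Benchmark]:
    """Return the cheapest model that meets the accuracy and latency limits,
    or None if no model qualifies."""
    eligible = [
        b for b in benchmarks
        if b.accuracy >= min_accuracy and b.latency_ms <= max_latency_ms
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda b: b.cost_per_1k_calls)


# Made-up placeholder results, not measurements of any real model.
BENCHMARKS = [
    Benchmark("model-a", accuracy=0.95, cost_per_1k_calls=10.0, latency_ms=900),
    Benchmark("model-b", accuracy=0.93, cost_per_1k_calls=2.0, latency_ms=300),
    Benchmark("model-c", accuracy=0.80, cost_per_1k_calls=0.5, latency_ms=150),
]

choice = pick_model(BENCHMARKS, min_accuracy=0.90, max_latency_ms=1000)
print(choice.model if choice else "no model qualifies")  # model-b
```

Filtering on quality and latency first and only then minimizing cost keeps a cheap but inaccurate model (here `model-c`) from being selected.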

Narrow AI helps reduce AI costs by up to 95% through the use of cheaper models and optimizing prompts for quality, cost, and speed. This allows users to achieve high accuracy and fast responses without incurring significant expenses.

Narrow AI optimizes prompt performance through Automated Prompt Optimization, which adjusts prompts to improve accuracy, reduce latency, and lower costs. It continuously monitors performance and adapts prompts to new models as they are released, ensuring optimal performance.