LLMWise

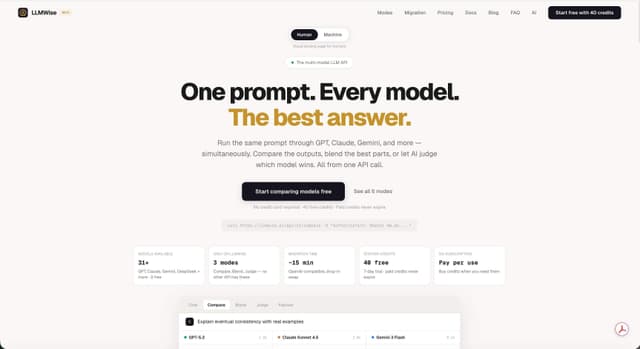

Stop managing multiple AI providers. Start using the best model for every task. LLMWise gives you one API to access every major LLM provider (OpenAI, Anthropic, Google, Meta, xAI, and DeepSeek), with intelligent routing that matches each prompt to the right model.

- Smart routing: Send a prompt and LLMWise picks the optimal model. Code goes to GPT, creative writing to Claude, translation to Gemini.
- Compare & blend: Run prompts across models side by side. Blend combines outputs into one stronger answer, and Judge mode lets models evaluate each other.
- Always resilient: Circuit-breaker failover reroutes requests to backup models when a provider goes down, so your app keeps running.
- Test & optimize: Benchmark suites, batch tests, optimization policies for speed, cost, or reliability, and automated regression checks.
- Bring your own keys: Use existing API keys at provider prices, or pay per use with LLMWise credits. No subscriptions.

Built for developers who want the best AI for every task without the complexity.
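The listing does not say how LLMWise classifies prompts. As a rough illustration of the smart-routing idea ("code goes to GPT, creative writing to Claude, translation to Gemini"), the sketch below assumes a simple keyword-based classifier. The rules, the `route` function, and the labels `"gpt"`, `"claude"`, and `"gemini"` are hypothetical placeholders, not LLMWise's API or real model IDs; keyword rules are only the simplest possible way to implement routing.

```python
import re
from typing import Tuple

# Hypothetical rules: (task category, keyword pattern, placeholder model label).
# Rules are checked in order, so an earlier rule wins when several match.
ROUTES = [
    ("code", re.compile(r"\b(def|class|function|bug|compile|python|sql|regex)\b", re.I), "gpt"),
    ("translation", re.compile(r"\btranslat(e|ion)\b", re.I), "gemini"),
    ("creative", re.compile(r"\b(poem|story|lyrics|slogan)\b", re.I), "claude"),
]
DEFAULT_MODEL = "gpt"  # placeholder fallback when no rule matches


def route(prompt: str) -> Tuple[str, str]:
    """Return (task_category, model_label) for the first rule that matches."""
    for category, pattern, model in ROUTES:
        if pattern.search(prompt):
            return category, model
    return "general", DEFAULT_MODEL


if __name__ == "__main__":
    for p in ["Fix the bug in this Python function",
              "Translate this paragraph into French",
              "Write a short poem about autumn",
              "What is the capital of France?"]:
        print(route(p), "<-", p)
```

For example, `route("Translate this paragraph into French")` returns `("translation", "gemini")`. Because rules are ordered, a prompt such as "Translate this Python function" is sent to the code route, and a prompt that matches no rule falls back to the default label.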


LLMWise is a platform that lets users manage multiple AI providers through a single API. It provides access to models from major providers such as OpenAI, Anthropic, Google, Meta, xAI, and DeepSeek, with intelligent routing that matches each prompt to the model best suited to the task.

Key features of LLMWise include smart routing that selects the optimal model for each prompt; side-by-side comparison and blending of outputs from different models; resilience through circuit-breaker failover to backup models; benchmarking, testing, and optimization tools; and the option to bring your own API keys or pay per use without a subscription.

Based on its description, LLMWise's strengths include streamlined access to multiple AI models through one API, intelligent routing that matches each task to a suitable model, and resilience against provider outages. No user-submitted pros or cons have been posted yet.

LLMWise is built for developers who want to use the best AI model for each task without the complexity of managing multiple providers themselves, which simplifies integrating AI into applications.

LLMWise includes a circuit-breaker failover feature that automatically reroutes requests to backup models when a primary provider goes down, so that applications keep running.
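The listing does not describe LLMWise's failover internals. The following is a minimal sketch of the general circuit-breaker pattern applied to provider failover, assuming each provider is a plain Python callable. `CircuitBreaker`, `call_with_failover`, and the default thresholds are illustrative names and values, not part of any LLMWise API. A breaker opens after a run of consecutive failures, requests skip that provider while it is open, and after a cool-down a trial call is let through (the "half-open" state).

```python
import time
from typing import Callable, Dict, List, Optional, Tuple

Provider = Tuple[str, Callable[[str], str]]  # hypothetical (name, call) pair


class CircuitBreaker:
    """Opens after `max_failures` consecutive errors; after `reset_after`
    seconds it goes half-open and lets a trial call through."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the breaker is closed

    def allow(self) -> bool:
        if self.opened_at is None:
            return True  # closed: traffic flows normally
        # Open: block until the cool-down elapses, then allow a trial call.
        return self.clock() - self.opened_at >= self.reset_after

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None  # close the breaker again

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = self.clock()  # (re)open and restart the cool-down


def call_with_failover(prompt: str, providers: List[Provider],
                       breakers: Dict[str, CircuitBreaker]) -> Tuple[str, str]:
    """Try providers in priority order, skipping any whose breaker is open.
    Returns (provider_name, response)."""
    errors = []
    for name, call in providers:
        breaker = breakers[name]
        if not breaker.allow():
            errors.append(f"{name}: circuit open")
            continue
        try:
            response = call(prompt)
        except Exception as exc:  # any provider error counts as a failure
            breaker.record_failure()
            errors.append(f"{name}: {exc}")
            continue
        breaker.record_success()
        return name, response
    raise RuntimeError("all providers unavailable: " + "; ".join(errors))


if __name__ == "__main__":
    def primary(prompt: str) -> str:
        raise ConnectionError("provider down")  # simulated outage

    def backup(prompt: str) -> str:
        return f"backup answer to: {prompt}"

    breakers = {"primary": CircuitBreaker(max_failures=2), "backup": CircuitBreaker()}
    providers = [("primary", primary), ("backup", backup)]
    for _ in range(3):
        print(call_with_failover("hello", providers, breakers))
    # After two failures the primary breaker is open, so the third call
    # goes straight to the backup without touching the primary.
```

Injecting the clock as a parameter is a design choice that makes the cool-down testable without sleeping; in normal use the default `time.monotonic` is unaffected by wall-clock adjustments.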